The Need for AI Agent Identity Management: Closing an Enterprise Blind Spot

Guy Tytunovich

Bot & AI Agent Trust Management | November 12, 2025

Enterprises face a growing blind spot created by the rapid rise of AI agents that now conduct research, make decisions, and transact throughout the digital buyer’s journey.

In her recent research, “Act Now: Implement Comprehensive AI Agent Identity Measures to Navigate Automation Trends,” Gartner Distinguished VP Analyst Avivah Litan warns that traditional identity, access, and automation controls cannot recognize or govern these new autonomous actors.

The solution is a new discipline called AI Agent Identity Management, which focuses on identifying, verifying, and governing machine-led interactions across the enterprise.

CHEQ was recognized in this research for our innovation in detecting and classifying Anonymous Web-Interfacing AI Agents, a use case Gartner highlights as one of the most urgent and complex challenges confronting enterprises today.

Understanding Anonymous Web-Interfacing AI Agents

Anonymous Web-Interfacing AI Agents are autonomous or semi-autonomous software entities that access public-facing websites and services without disclosing their identity or origin.

These agents are transforming the web, reshaping the digital buyer’s journey and expanding enterprise threat exposure. Some operate within authorized boundaries, acting transparently on behalf of legitimate users who have explicitly approved their actions. Others act without disclosure, scraping, impersonating, or manipulating systems for financial or operational gain.

- Good agents operate transparently, within approved parameters, and on behalf of a legitimate human or organization that has granted permission. That permission might take the form of shared credentials, limited transaction authority, or defined access rights.

- Bad or rogue agents operate without consent or control, often exploiting automation to evade detection, drain resources, or distort outcomes.

The challenge is no longer simply blocking automation but understanding intent and authorization. Enterprises must identify which agents belong, which do not, and have the right controls in place to govern or mitigate the actions of both.
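To make the distinction between authorized and unauthorized agents more concrete, here is a minimal Python sketch. It assumes a hypothetical delegation record capturing the kinds of permission described above (defined access rights and limited transaction authority). `DelegationGrant`, `AgentRequest`, `evaluate`, and the three-way verdict are illustrative names only, not CHEQ's implementation or any product API, and a real system would also need to authenticate an agent's claimed identity rather than trust a self-declared ID.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Verdict(Enum):
    ALLOW = "allow"    # declared agent acting within its grant
    REVIEW = "review"  # undisclosed or unknown agent: verify before trusting or blocking
    DENY = "deny"      # declared agent acting outside its grant


@dataclass(frozen=True)
class DelegationGrant:
    """Hypothetical record of what a human or organization authorized an agent to do."""
    agent_id: str
    principal: str                   # the human or organization that granted permission
    allowed_actions: frozenset[str]  # defined access rights
    max_transaction: float = 0.0     # limited transaction authority


@dataclass(frozen=True)
class AgentRequest:
    agent_id: Optional[str]  # None when the agent does not disclose its identity
    action: str
    amount: float = 0.0


def evaluate(request: AgentRequest, grants: dict[str, DelegationGrant]) -> Verdict:
    """Classify a request by checking it against the agent's delegation grant, if any."""
    grant = grants.get(request.agent_id) if request.agent_id is not None else None
    if grant is None:
        # No matching authorization: route to further verification instead of blocking.
        return Verdict.REVIEW
    if request.action not in grant.allowed_actions:
        return Verdict.DENY
    if request.amount > grant.max_transaction:
        return Verdict.DENY
    return Verdict.ALLOW


# Example: a shopping assistant that a user authorized to spend up to 200.
grants = {
    "shop-assistant-01": DelegationGrant(
        agent_id="shop-assistant-01",
        principal="alice@example.com",
        allowed_actions=frozenset({"browse", "add_to_cart", "checkout"}),
        max_transaction=200.0,
    )
}

print(evaluate(AgentRequest("shop-assistant-01", "checkout", 150.0), grants))  # Verdict.ALLOW
print(evaluate(AgentRequest("shop-assistant-01", "checkout", 500.0), grants))  # Verdict.DENY
print(evaluate(AgentRequest(None, "browse"), grants))                          # Verdict.REVIEW
```

In this sketch, undisclosed agents receive a review verdict rather than an outright block, reflecting the point that the goal is to govern agents by authorization and intent, not simply to stop automation.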

Why Legacy IAM and Bot Mitigation Can’t Keep Up

Today’s critical blind spot exists because identity systems and automation defenses were built for different eras.

Traditional Identity and Access Management (IAM) systems were designed for humans. They assume persistent credentials, static permissions, and predictable lifecycles, and they cannot keep pace with the dynamic, high-velocity nature of AI agents. Passwords, MFA, and directory-based access models break down when facing autonomous entities that appear and disappear in milliseconds.

Legacy bot mitigation tools struggle for a different reason: they were built to stop scripted, repetitive behavior, not adaptive intelligence. Traditional approaches focus on signals such as speed, volume, or headless browsers and fail to understand intent. AI agents can mimic human behavior, adapt their tactics, and collaborate across systems, rendering static rules obsolete.

To close this blind spot, enterprises need agent-aware systems that continuously interpret context, identity, and behavior across every digital interaction. Gartner predicts that by 2029, 75% of enterprises will deploy agent-aware systems capable of interacting with machine identities, mitigating AI-driven risks, and supporting beneficial agent activity.

The arrival of agents requires a reexamination of how detection and verification work. AI Agent Identity Management builds on existing detection and verification foundations, introducing new layers of behavioral, contextual, and identity intelligence that legacy tools were never designed to handle.

From Bot Detection to AI Agent Intelligence

At CHEQ, we see AI Agent Identity Management as a natural extension of the detection and intelligence work that began with bot management. Bot detection remains essential to protecting the digital ecosystem, but the rise of autonomous, adaptive agents introduces new dimensions of behavior, context, and identity.

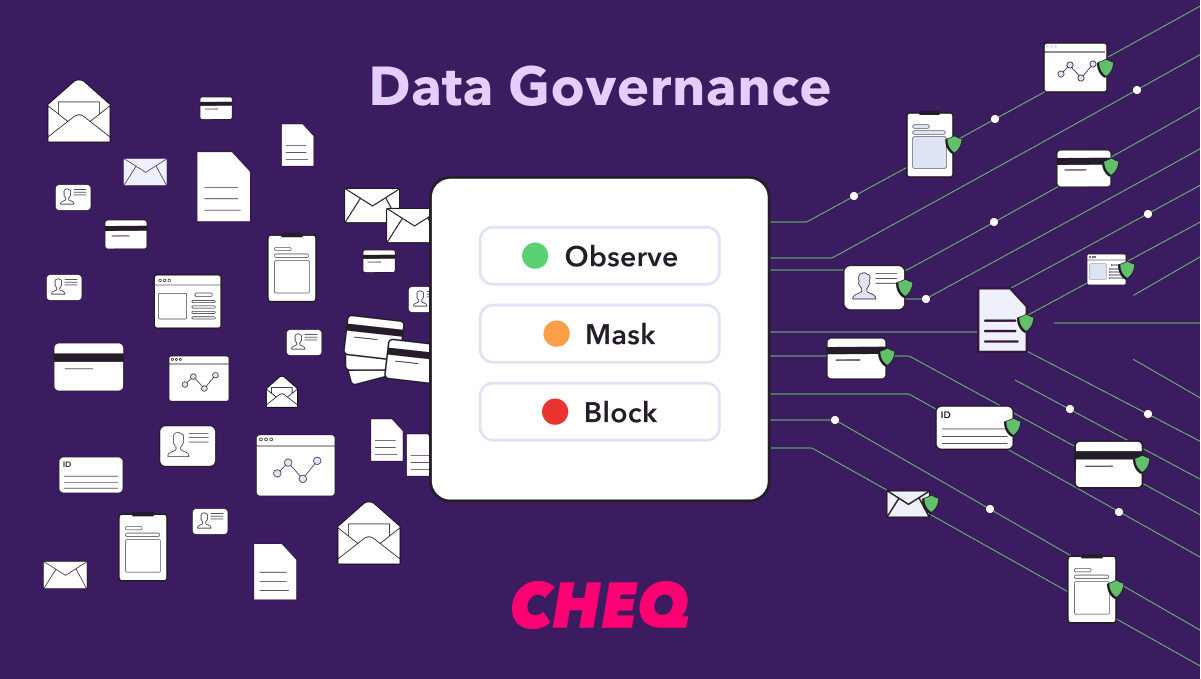

While both disciplines share a foundation, they interpret automation differently. Bot detection focuses on identifying patterns of scripted or repetitive behavior, while agent detection requires understanding autonomous systems that act dynamically and contextually.

The table below highlights how our approach differs across key detection aspects and how AI Agent Identity Management expands upon the foundations of advanced bot mitigation.

Distinguishing Between Bots & AI Agents

As automation evolves into autonomy, detection must evolve with it, ensuring that every interaction, human or not, is visible and governed.

Where AI Agent Identity Management Is Headed

The next phase of AI Agent Identity Management will focus on connecting identity, behavior, and trust across both humans and their agents. As the web becomes more autonomous, enterprises need intelligence that can see beyond automation and understand the relationships behind every interaction to decide which ones to trust.

- Linking users and their agents. Building persistent behavioral baselines that connect verified humans with their authorized AI agents, enabling traceability and trust.

- Verifying agent authenticity. Validating credentials and declared or commercial signals against network-level interconnectivity and behavioral patterns that confirm legitimate use.

- Evaluating intent and legitimacy. Moving from simple detection to understanding the reason behind activity, distinguishing beneficial automation from abuse or manipulation.

- Affirming trust dynamically. Establishing multi-layer trust assessments that adapt over time, recognizing AI agents as valid actors that require verification and ongoing affirmation rather than outright blocking.
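The idea of multi-layer trust that adapts over time can be illustrated with a small Python sketch. It assumes each layer in the list above (user linkage, authenticity, intent) yields a score between 0 and 1; the hypothetical `AgentTrust` class blends those scores with illustrative weights and updates a running score with an exponential moving average, so trust is earned or lost gradually. The weights, thresholds, and `allow`/`verify`/`block` decisions are assumptions for illustration only, not a description of how CHEQ or Gartner assess agents.

```python
from dataclasses import dataclass
from typing import Dict

# Hypothetical weights for the three signal layers listed above; they sum to 1.0.
LAYER_WEIGHTS = {"linkage": 0.3, "authenticity": 0.4, "intent": 0.3}


@dataclass
class AgentTrust:
    """Running trust estimate for one agent, updated after every interaction."""
    score: float = 0.5  # neutral prior: a new agent is neither trusted nor blocked
    alpha: float = 0.2  # how strongly a single observation moves the score

    def observe(self, signals: Dict[str, float]) -> float:
        # Each layer signal is assumed to lie in [0, 1]; a missing layer counts as 0.
        current = sum(w * signals.get(layer, 0.0) for layer, w in LAYER_WEIGHTS.items())
        # Exponential moving average: recent behavior counts most, but history persists.
        self.score = (1 - self.alpha) * self.score + self.alpha * current
        return self.score

    def decision(self) -> str:
        # Hypothetical thresholds; the middle band asks for verification, not blocking.
        if self.score >= 0.7:
            return "allow"
        if self.score >= 0.3:
            return "verify"
        return "block"


trusted, suspicious = AgentTrust(), AgentTrust()
print(trusted.decision())  # verify: new agents start in the middle band
for _ in range(10):
    trusted.observe({"linkage": 1.0, "authenticity": 1.0, "intent": 0.9})
    suspicious.observe({"linkage": 0.0, "authenticity": 0.2, "intent": 0.1})
print(trusted.decision(), suspicious.decision())  # allow block
```

The middle `verify` band reflects the idea of affirming trust rather than blocking outright: a new agent starts there and must accumulate consistent evidence across layers before it is allowed.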

This is where AI Agent Identity Management is heading: toward continuous verification, contextual understanding, and dynamic trust.

The Enterprise Imperative

AI agents are already present throughout the buyer’s journey for most enterprises. In some industries they are already transacting, and the balance between human and agent activity is shifting fast.

Enterprises that cannot identify or govern AI agents engaging with them face escalating risk. Beyond data exposure, the consequences can include financial loss, reputational damage, consumer harm, and distorted insights that erode trust and undermine decision-making across the business.

At the same time, the opportunity is enormous. The enterprises that implement AI Agent Identity Management will be best positioned to stay ahead, move faster, build deeper trust with customers, and unlock new growth by enabling legitimate, value-creating agents to operate safely and at scale.

The need for AI Agent Identity Management is now coming into focus. As AI agents take a more active role across the digital economy, enterprises must evolve how they see, understand, and govern automation. Identity, once managed for humans alone, now extends to the autonomous systems acting on their behalf.

The organizations that act early will define the standards of trust for this new era. They will be the ones that can confidently welcome beneficial agents, prevent misuse, and turn automation into advantage.

In the age of AI, trust is not assumed. It’s built through visibility, verification, and intelligent control.