How Web Scraping Attacks Impact Businesses – And How You Can Stop Them

Jeffrey Edwards

Cyber Risks & Threats | November 10, 2022

In this post, we break down the various forms of web scraping and go over ways you can mitigate and prevent damage to your business.

Contents:

Why do Bad Actors Scrape Web Content?

What Kind of Content Do Scraping Bots Target?

How Does Web Content Scraping Hurt My Website?

How Can I Detect Web Content Scrapers?

How Can I Block Web Content Scrapers?

What is Web Scraping?

Web scraping is the process of extracting content and data from a website. Many digital businesses, like search engines, price comparison tools, and market research companies, use web scrapers for legitimate purposes, but they’re also used by bad actors for malicious purposes.


Web scraping can be done manually, but it’s typically performed by automated bots that are programmed to recognize and extract specific data, either from the website’s underlying HTML or from connected databases and APIs. Some bots may also create fake accounts to gain deeper access to a site. Malicious web scraping can be used to build fraudulent websites, to steal competitive information, or simply to create duplicate sites for ad fraud purposes.
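To make “programmed to recognize and extract specific data” concrete, here is a minimal sketch of the HTML-extraction step using Python’s standard-library `html.parser`. The markup and the `product-name` and `price` class names are hypothetical; a real scraper is written against whatever structure the target site actually uses.

```python
from html.parser import HTMLParser


class ProductExtractor(HTMLParser):
    """Collects text from elements whose class is 'product-name' or 'price'.

    The class names are hypothetical examples of the markup a scraper
    might be programmed to look for.
    """

    TARGET_CLASSES = {"product-name", "price"}

    def __init__(self):
        super().__init__()
        self.current = None  # class of the target element we are inside, if any
        self.records = []    # extracted (field, text) pairs, in page order

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for cls in classes:
            if cls in self.TARGET_CLASSES:
                self.current = cls
                break

    def handle_data(self, data):
        # Keep text only while inside a target element; skip whitespace.
        if self.current and data.strip():
            self.records.append((self.current, data.strip()))

    def handle_endtag(self, tag):
        self.current = None


# A hypothetical product listing page.
page = """
<div class="product">
  <h2 class="product-name">Trail Running Shoe</h2>
  <span class="price">$89.99</span>
</div>
<div class="product">
  <h2 class="product-name">Hiking Sock</h2>
  <span class="price">$12.50</span>
</div>
"""

extractor = ProductExtractor()
extractor.feed(page)
for field, value in extractor.records:
    print(field, value)
```

Run against a whole catalog, a loop like this is all it takes to copy every product name and price on a site.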

‘Good’ and Bad Scrapers

As noted above, many scrapers online are legitimate. These bots are easy to distinguish from invalid traffic because they identify themselves in the User-Agent HTTP header and follow the directives in your site’s robots.txt file, which tells a bot which parts of your website it may and may not crawl. Malicious scrapers, however, usually spoof their user agent and ignore your robots.txt file; they’re after anything they can get.
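Here is a minimal sketch of the check a well-behaved crawler performs, using Python’s standard-library `urllib.robotparser`. The robots.txt rules, the `BadBot` agent name, and the shop URLs are hypothetical. Keep in mind that robots.txt is purely advisory: a malicious scraper simply never runs this check.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt for an example store.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/

User-agent: BadBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(user_agent, url):
    """A polite crawler asks this before every request it makes."""
    return parser.can_fetch(user_agent, url)


print(allowed("Googlebot", "https://shop.example.com/products/shoe"))
print(allowed("Googlebot", "https://shop.example.com/checkout/cart"))
print(allowed("BadBot", "https://shop.example.com/products/shoe"))
```

Under these rules, Googlebot may fetch product pages but not the checkout, and `BadBot` is refused everywhere.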

Why do Bad Actors Scrape Web Content?

People scrape web content for a variety of reasons. An attacker could copy a site’s content to usurp its search engine rankings, or duplicate its look and branding for fraud. Hackers could also use scraped content to build phishing sites and fake advertising campaigns that trick users into entering personal information.

Plagiarism

A common reason for malicious web scraping is that hackers or scammers need to fill a site with content. When Google detects copied content, it may treat it as duplicate content, which could hurt the organic search rankings of both the original site and the copy. For hackers, this is a moot point: the purpose of the site is usually to carry out ad fraud schemes or deliver malware to visitors.

Fake eCommerce Stores

Another reason hackers scrape websites is to create fake eCommerce stores and steal users’ payment information. They can use bots to scrape all the content from your site (product descriptions, prices, and blog content) and then re-create the store, either with your branding intact or with their own. Unsuspecting visitors to this bogus site may try to buy “your” product at a great price and will receive either a low-quality knockoff or nothing at all. Worse yet, the shopper’s payment information may be stolen and sold on the dark web.

Fake Ads

Scraped content can also be used for ad fraud. A fraudulent publisher fills a site with popular scraped content, runs ads on it, and then directs bots to click on those ads, generating bogus ad revenue for themselves.

Price Scraping

Sometimes a company scrapes all of a competitor’s pricing information in order to adjust its own prices. It’s a tactic that helps companies stay competitive, and it’s probably the most benign on our list.

What Kind of Content Do Scraping Bots Target?

Bots can extract all kinds of content from your website, including text, images, HTML, CSS, product prices, and much more. In a worst-case scenario, web scrapers could even collect improperly stored personally identifiable information (PII) about your customers.

How Does Web Content Scraping Hurt My Website?

Web scraping attacks can do massive damage to a brand’s reputation, website performance, and security, and even to SEO results.

SEO Rankings

If you’ve ever owned a site, you’ve probably seen spammy pages that copy entire blog posts of yours and even have the audacity to link back to your blog. That’s at the low end of the spectrum. An occasional copy of a post likely won’t hurt your site, but if someone copies your content on a large scale, it can really hurt your rankings. Google can label it as duplicate content, and it may even lead to a penalty.

Site owners can mitigate these scams by disavowing links, using canonical tags, and contacting copycats directly to ask them to take the duplicate content down, but the best defense is to block illegitimate scraping in the first place.
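Disavowing means uploading a plain-text file to Google’s Disavow links tool. Google’s documented format is one entry per line, with a `domain:` prefix to disavow an entire domain and `#` marking comment lines. The sketch below assembles such a file; the domains and URL are hypothetical, and Google describes disavowing as an advanced feature to use with caution.

```python
def build_disavow_file(domains, urls=(), note=None):
    """Return disavow-file text: an optional '#' comment, one 'domain:' line
    per unique domain (case-insensitive, sorted), then individual URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")
    unique_domains = sorted({d.strip().lower() for d in domains if d.strip()})
    for domain in unique_domains:
        lines.append(f"domain:{domain}")
    for url in urls:
        lines.append(url.strip())
    return "\n".join(lines) + "\n"


# Hypothetical scraper domains found while reviewing backlinks.
scraper_domains = ["copycat-blog.example", "Copycat-Blog.example", "spam-mirror.example"]
text = build_disavow_file(
    scraper_domains,
    urls=["https://other.example/stolen-post.html"],
    note="Scraper sites found during review",
)
print(text)
```

Disavowing a whole domain is usually simpler than listing every copied page, since scraper sites tend to copy content in bulk.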

Reputational Damage

Content scraping can seriously damage your reputation, especially if you run an eCommerce site. If your users are routed to a fake store that looks just like yours and then discover it was a scam, they may lose trust in your brand and never return.

Website Spoofing

Perhaps the worst damage from content scraping occurs when a hacker re-creates your site and steals shoppers’ payment information. Attackers can build elaborate phishing schemes that impersonate real businesses, and if those scams are misattributed to you, your business could be banned from the platforms and marketing channels you rely on (such as affiliate platforms and eCommerce marketplaces).

How Can I Detect Web Content Scrapers?

There are several ways you can detect content scraping, both manually and automatically.

Do a Manual Search

The simplest way to find duplicate content is to search Google for the title of a particular post; any duplicates may show up in the results. It’s worth doing whenever you notice an unusual change in your traffic or engagement levels.
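One way to make this manual search more precise is to wrap the title in quotes (exact-phrase match) and exclude your own domain with Google’s `-site:` operator, so that any remaining results are pages elsewhere. This small sketch builds such search links for you to open and review by hand; the title and domain are hypothetical.

```python
from urllib.parse import urlencode


def duplicate_search_url(title, own_domain):
    """Build a Google search link for an exact-phrase title, excluding your own site."""
    # Quotes request the exact phrase; "-site:" drops results from your domain.
    query = f'"{title}" -site:{own_domain}'
    return "https://www.google.com/search?" + urlencode({"q": query})


print(duplicate_search_url("How to Choose Trail Running Shoes", "shop.example.com"))
```

Looping over a list of your post titles gives you a batch of links to check, though reviewing the results is still manual work.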

The problem is that checking every page on your site this way could take hours, which is why it pays to automate the process. Here are some options.

Pingbacks on Internal Links

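Platforms like WordPress and Wix have a feature called pingback, which notifies you when another site links to one of your pages. If a scraper republishes your posts, internal links included, on a pingback-enabled site, those copies can trigger pingbacks to your own pages, helping you detect unauthorized scraping and deal with it quickly.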
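Under the Pingback protocol, the linking site sends an XML-RPC call named `pingback.ping` carrying two parameters: the source URI (the page that links to you) and the target URI (your page). WordPress handles these calls for you; the sketch below only shows what a pingback contains and how you might flag unfamiliar sources. The domain sets are hypothetical placeholders.

```python
import xmlrpc.client
from urllib.parse import urlparse

OWN_DOMAINS = {"blog.example.com"}        # hypothetical: your own site(s)
KNOWN_PARTNERS = {"partner.example.org"}  # hypothetical: sites you expect links from


def review_pingback(request_body):
    """Decode a pingback.ping XML-RPC request and flag unfamiliar source sites."""
    params, method = xmlrpc.client.loads(request_body)
    if method != "pingback.ping" or len(params) != 2:
        raise ValueError("not a pingback.ping call")
    source_uri, target_uri = params
    source_host = urlparse(source_uri).hostname or ""
    suspicious = source_host not in OWN_DOMAINS | KNOWN_PARTNERS
    return source_uri, target_uri, suspicious


# Simulate the request body a pingback-enabled copycat blog would POST to you.
body = xmlrpc.client.dumps(
    ("https://copycat.example/your-post-copy/", "https://blog.example.com/your-post/"),
    methodname="pingback.ping",
)
print(review_pingback(body))
```

Internal pingbacks (your own posts linking to each other) come from your own domain and are not flagged.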

Google Alerts

Google Alerts are another great way to catch scraped content. An alert notifies you when new pages matching a search query appear in Google’s results, so you can set up alerts for your post titles or distinctive phrases from your content. It’s a great solution regardless of the CMS your site uses, and you can have the alerts emailed to you as new matches appear.

Keyword Tools

If you’re heavy on SEO and publish a lot of content, you’re probably already using keyword tools like Ahrefs or Semrush. Their backlink reports show the sites and pages they have found linking to your content, and you can filter by domain authority (or the tool’s equivalent metric) to spot spam sites. From there, it’s easy to decide whether to disavow the link or contact the site owner. You can also schedule automatic reports to be emailed to you weekly or monthly, which makes it easier to stay on top of things.
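If you export a backlink report to CSV, a few lines of Python can shortlist likely scraper sites. This is a sketch under stated assumptions: the column names `referring_domain`, `authority`, and `links` are hypothetical (rename them to match your tool’s export), and the thresholds are arbitrary starting points.

```python
import csv
import io


def flag_low_authority(csv_text, max_authority=10, min_links=20):
    """Return referring domains with low authority but many links to you.

    Column names 'referring_domain', 'authority', and 'links' are
    hypothetical; adjust them to match your tool's export.
    """
    flagged = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        authority = float(row["authority"])
        links = int(row["links"])
        if authority <= max_authority and links >= min_links:
            flagged.append((row["referring_domain"], authority, links))
    # Most links first: these are the likeliest bulk copies.
    return sorted(flagged, key=lambda item: item[2], reverse=True)


# A hypothetical export.
export = """referring_domain,authority,links
news.example.org,72,4
copycat-blog.example,3,140
spam-mirror.example,1,58
smallblog.example,8,2
"""

for domain, authority, links in flag_low_authority(export):
    print(domain, authority, links)
```

The small blog with two links is left alone: low authority alone isn’t suspicious, but low authority combined with bulk linking often is.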

Webmaster Tools

Another great way to detect content thieves is to use your webmaster tools, such as Google Search Console. Review the sites that link to you and sort them by the number of linking pages. If you spot a site in an unrelated niche or category with an unusually large number of pages linking back to your content, you may have found a culprit.
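If your webmaster tools let you export the list of linking URLs, you can count linking pages per domain and surface outliers automatically. Here is a minimal sketch, assuming a plain list of URLs as input; the “at least five times the median” threshold is an arbitrary assumption, and judging whether a flagged site is in an unrelated niche is still up to you.

```python
from collections import Counter
from statistics import median
from urllib.parse import urlparse


def links_per_domain(linking_urls):
    """Count linking pages per external domain."""
    counts = Counter()
    for url in linking_urls:
        host = urlparse(url.strip()).hostname
        if host:
            # Treat www.example.com and example.com as one site (removeprefix needs Python 3.9+).
            counts[host.removeprefix("www.")] += 1
    return counts


def outliers(counts, factor=5):
    """Return domains with at least `factor` times the median link count, busiest first."""
    if not counts:
        return []
    typical = median(counts.values())
    return [domain for domain, n in counts.most_common() if n >= factor * typical]


# Hypothetical export: three ordinary referrers and one site linking from 30 pages.
linking = [
    "https://www.news.example.org/story",
    "https://partner.example.net/resources",
    "https://forum.example.com/thread/1",
] + [f"https://copycat-blog.example/post-{i}" for i in range(30)]

counts = links_per_domain(linking)
print(outliers(counts))
```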

How Can I Block Web Content Scrapers?

The techniques outlined above can help you get a leg up on basic scraping attacks and unsophisticated threat actors. However, malicious scraper bots, even those commercially available to competitors, are increasingly sophisticated and able to evade basic detection techniques and masquerade as legitimate traffic.
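One common masquerade is a scraper that sets its User-Agent to Googlebot’s. Google documents a way to verify the claim: run a reverse DNS lookup on the visitor’s IP, check that the hostname is under `googlebot.com` or `google.com`, then run a forward lookup on that hostname and confirm it resolves back to the same IP. The sketch below implements that check with Python’s `socket` module (IPv4 only). The fake DNS tables and IP addresses are made-up stand-ins so the example runs offline; this is one basic check, not a complete bot-detection system.

```python
import socket

# Domains Google documents for Googlebot's reverse DNS names.
GOOGLEBOT_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip, reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Verify a visitor whose User-Agent claims to be Googlebot (IPv4 only)."""
    # Step 1: reverse DNS. The PTR hostname must be under a Google domain.
    try:
        hostname, _aliases, _addresses = reverse_lookup(ip)
    except OSError:  # socket.herror / socket.gaierror: no usable PTR record
        return False
    if not hostname.rstrip(".").endswith(GOOGLEBOT_SUFFIXES):
        return False
    # Step 2: forward DNS. Anyone can set a PTR record on IPs they control,
    # so the hostname must resolve back to the same address.
    try:
        _name, _aliases, addresses = forward_lookup(hostname)
    except OSError:
        return False
    return ip in addresses


# Offline stand-ins for DNS so the example runs without network access.
FAKE_PTR = {
    "66.249.66.1": "crawl-66-249-66-1.googlebot.com",
    "203.0.113.7": "crawl-203-0-113-7.googlebot.com",  # forged PTR record
}
FAKE_A = {"crawl-66-249-66-1.googlebot.com": ["66.249.66.1"]}


def fake_reverse(ip):
    if ip not in FAKE_PTR:
        raise socket.herror("Unknown host")
    return FAKE_PTR[ip], [], [ip]


def fake_forward(name):
    if name not in FAKE_A:
        raise socket.gaierror("Name or service not known")
    return name, [], FAKE_A[name]


for ip in ["66.249.66.1", "203.0.113.7", "198.51.100.9"]:
    print(ip, is_verified_googlebot(ip, fake_reverse, fake_forward))
```

In production you would call `is_verified_googlebot(ip)` with the real `socket` defaults and cache results, since DNS lookups on every request are slow.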

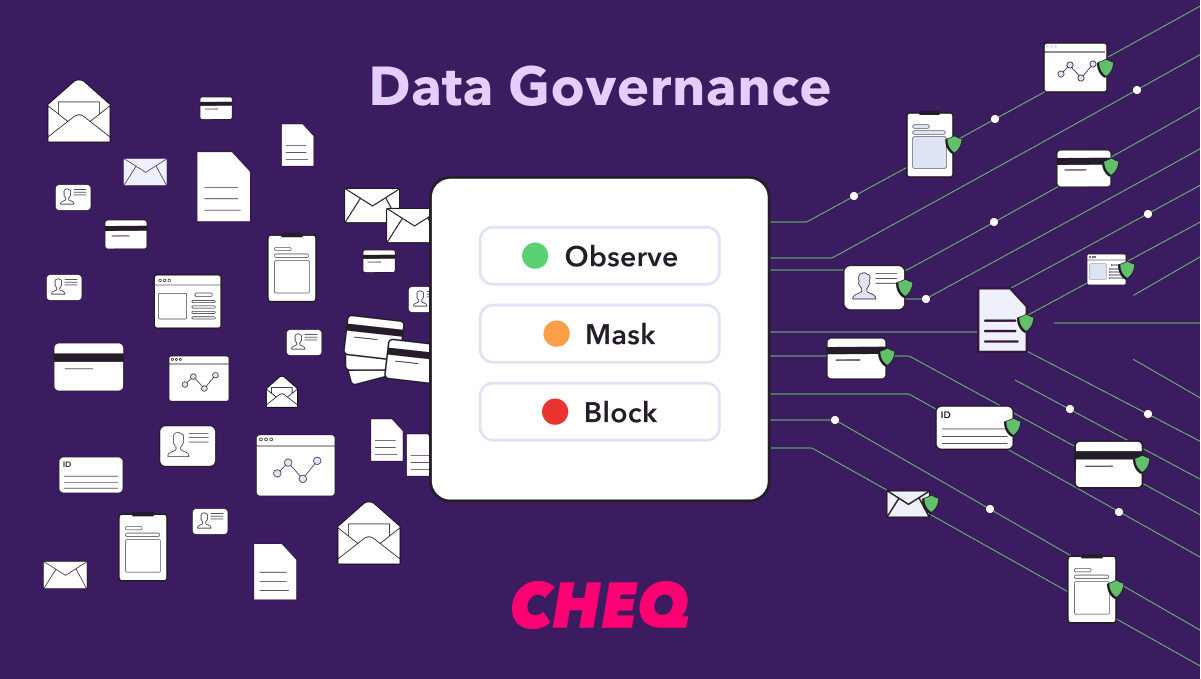

For businesses serious about protecting their online properties, a comprehensive go-to-market security platform can automatically detect and block invalid traffic in real time.

CHEQ leverages thousands of security challenges to evaluate site traffic in real time, determine whether each visitor is legitimate, suspicious, or invalid, and take appropriate action, such as blocking or redirecting that visitor.

Book a demo today to see how CHEQ can help protect your business from web scrapers and other threats.