Which technologies can be used to maximize bot mitigation besides blacklisting

CHEQ

Website Ops & Security | August 01, 2022

Author: Ram Valsky

The ‘Tech CHEQ’ series offers internal expertise from CHEQ’s cybersecurity team.

Security teams have traditionally relied on blacklisting to protect their networks and environments. The approach makes sense for systems designed to be open to as many people as possible. In these systems, only users identified as a threat are blocked by default. Everyone else is presumed safe, or “good to go”, unless shown otherwise. Some examples of security applications that use blacklists are:

- Antivirus: that protects the end user from malicious software downloaded from the internet.

- Firewall: that inspects all data packets entering or leaving the network and decides whether to allow it or not.

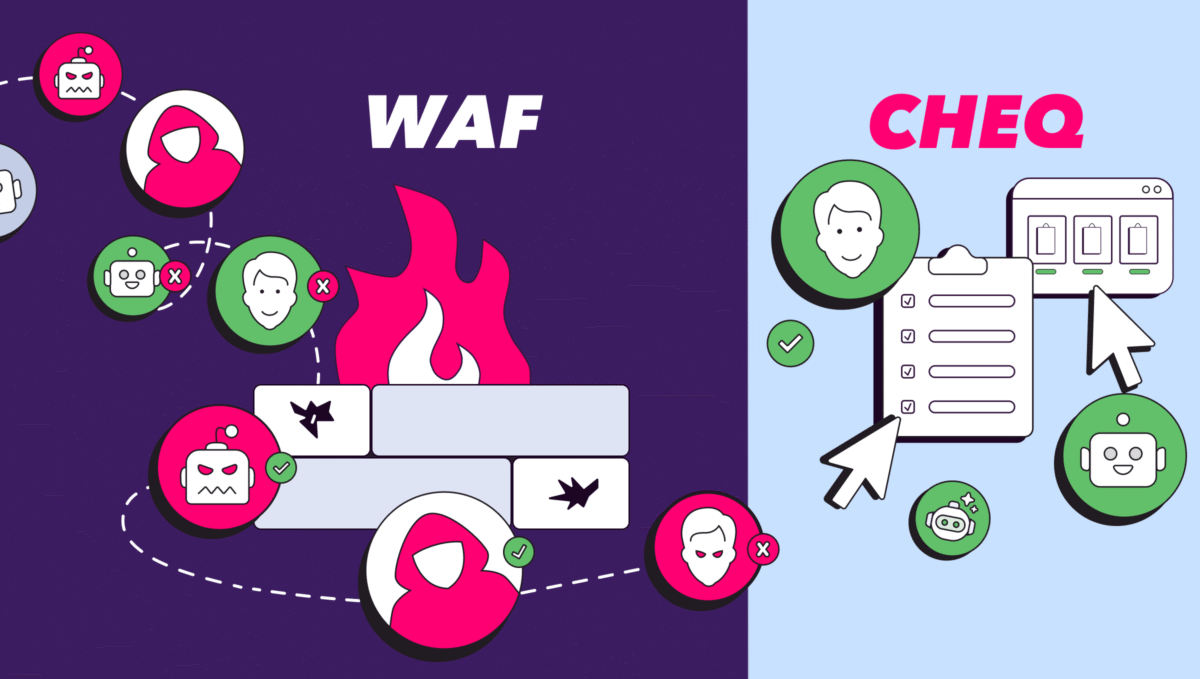

- WAF: application-level firewall, that focus on threats on HTTP such as breaking authentication attacks, XSS, and SQL injections.

Blacklisting can therefore be effective where threats are easy to define and it is clear what to block, as in the cases above. The situation is different when you need to protect your website and marketing campaigns from bots, fake users, and other automation tools. There, a blacklist should not be your main strategy, because the threats and fraud schemes targeting Go-To-Market teams have become far more sophisticated over time.

What are blacklists?

As the name indicates, blacklists are lists of fingerprints of malicious entities or illegitimate activities. Security applications and fraud prevention companies, for example, use them to block those malicious actors from accessing their networks or websites. The entries are selected in advance according to security definitions that can be adjusted over time.
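At its simplest, checking a visitor against an IP blacklist is a membership test. The Python sketch below assumes the list holds single IPs and CIDR ranges. The entries are RFC 5737 documentation addresses, not real threat data, and `is_blacklisted` is a hypothetical helper, not any vendor's API.

```python
import ipaddress

# Illustrative blacklist: one single host and one /24 range, both taken from
# the RFC 5737 documentation ranges rather than real threat data.
BLACKLIST = [
    ipaddress.ip_network("192.0.2.15/32"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def is_blacklisted(ip: str, blacklist=BLACKLIST) -> bool:
    """Return True if the address falls inside any blacklisted network."""
    addr = ipaddress.ip_address(ip)
    # An address of the other IP version is never "in" a network, so mixed
    # IPv4/IPv6 lists simply return False for non-matching versions.
    return any(addr in net for net in blacklist)


print(is_blacklisted("198.51.100.42"))  # True: inside the /24 range
print(is_blacklisted("203.0.113.7"))    # False: not listed
```

The result is a plain yes or no. It carries no notion of how confident the list is about each entry.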

The use of blacklisting for mitigating malicious bots in fraud prevention

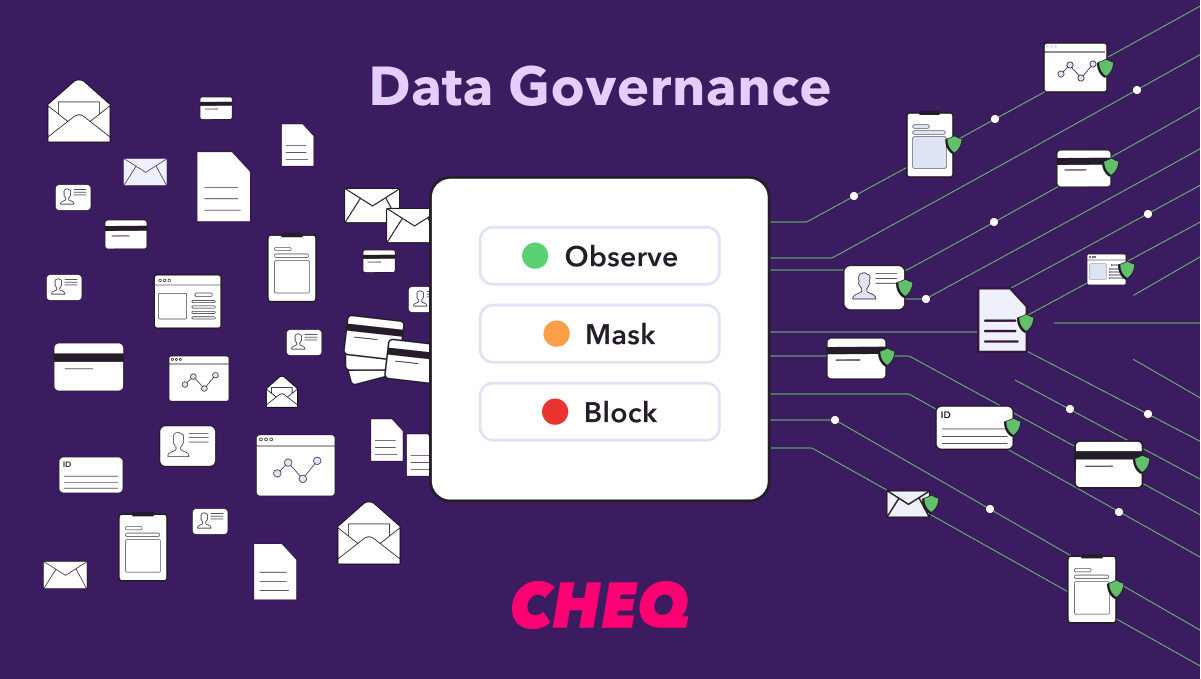

In fraud prevention, several types of blacklists are offered as a countermeasure against malicious activity, including IP address, email address, and domain-based blacklists. These blacklists, usually called “feeds”, are offered by many blacklist providers. Some, such as blocklist.de, FireHOL, and Spamhaus, are freely available for private use, while others are paid.

As you might expect, these feeds differ in many ways: the type of access they provide, their data format, their goal, their data collection methodology, and how often the data is updated and re-evaluated. These variables are critical because each blacklist is built for a different purpose, using different methods and signals from various sources on the network. Typical sources include endpoint security software such as antivirus products, routers, network security and infrastructure companies, DNS and SMTP servers, and web security software.
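Format differences show up as soon as you try to load a feed. The sketch below assumes a plain-text layout with one IP or CIDR per line, where `#` or `;` marks a comment. Several public feeds resemble this layout, but each provider documents its own format, and that documentation should be checked first. `parse_feed` and the sample text are hypothetical.

```python
import ipaddress


def parse_feed(text: str) -> list:
    """Parse a plain-text feed: one IP or CIDR per line, '#' or ';' starts a comment."""
    networks = []
    for line in text.splitlines():
        # Drop anything after a comment marker, then surrounding whitespace.
        entry = line.split("#", 1)[0].split(";", 1)[0].strip()
        if not entry:
            continue
        try:
            # strict=False tolerates host bits set in a CIDR (e.g. 10.0.0.1/8).
            networks.append(ipaddress.ip_network(entry, strict=False))
        except ValueError:
            # Skip malformed lines instead of rejecting the whole feed.
            continue
    return networks


SAMPLE_FEED = """\
# Hypothetical feed header
192.0.2.15
198.51.100.0/24 ; SAMPLE-REF-1
not-an-ip
"""

nets = parse_feed(SAMPLE_FEED)
print([str(n) for n in nets])  # ['192.0.2.15/32', '198.51.100.0/24']
```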

However, some security companies still use blacklisting broadly, yet it has become far less effective in recent years at preventing fraud and mitigating bots.

The downsides of blacklisting

1 – Size vs. accuracy and relevance

Because blacklists draw on so many sources, some providers take pride in their size. In theory, size shows how many threats were identified and, therefore, how effective the list is. In practice, the number of entries matters much less than their accuracy and relevance and the use cases in which they were evaluated, especially when it comes to stopping bots.
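One way to see why size alone says little is to measure a list against traffic whose labels you already trust. The sketch below computes two measures for two made-up lists: precision (how many of the list's hits were really bots) and recall (how many of the bots the list caught). The IPs, the labels, and the `evaluate` helper are all hypothetical.

```python
# Hypothetical labeled sample: IP -> True if confirmed bot, False if human.
LABELED = {
    "192.0.2.1": True,
    "192.0.2.2": True,
    "192.0.2.3": False,
    "192.0.2.4": False,
    "192.0.2.5": True,
    "192.0.2.6": False,
}


def evaluate(blacklist: set, labeled: dict) -> dict:
    """Precision and recall of a blacklist on a labeled traffic sample."""
    hits = [ip for ip in labeled if ip in blacklist]
    true_pos = sum(1 for ip in hits if labeled[ip])
    total_bots = sum(1 for is_bot in labeled.values() if is_bot)
    return {
        "size": len(blacklist),
        "precision": true_pos / len(hits) if hits else 0.0,
        "recall": true_pos / total_bots if total_bots else 0.0,
    }


# A large list padded with entries that never appear in this traffic and that
# also contains human IPs, versus a small curated list (both made up).
big_list = {f"203.0.113.{i}" for i in range(200)} | {
    "192.0.2.1", "192.0.2.3", "192.0.2.4", "192.0.2.6",
}
small_list = {"192.0.2.1", "192.0.2.2", "192.0.2.5"}

print(evaluate(big_list, LABELED))
print(evaluate(small_list, LABELED))
```

The 204-entry list has a precision of 0.25, because three of its four hits are humans, and it catches only one of the three bots. The 3-entry list flags all three bots and no humans.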

2 – Lack of customization and false positives

For bot mitigation, blacklists alone are neither effective nor relevant, no matter how many threats they list. They give a binary “yes or no” answer, which produces many false positives. They also cannot be customized or optimized for the customer’s web assets. This stems from insufficient metadata, the way entries are divided into categories, and unknown data collection methods. Together, these make it hard to judge how relevant a threat source is to each use case.

3 – Unique identifiers are not sufficient

Another crucial problem with blacklisting is its reliance on unique identifiers. Identifiers such as IP addresses are common in bot mitigation and fraud prevention. Many security systems assume that blacklisting a threat by its location will reduce risk and keep businesses from being flooded with unwanted traffic from a specific region.

Take IP addresses as an example. Most attackers today use VPNs, proxies, and IP rotation to hide their identities, so blacklisting IPs is not enough to block their attacks. Bots also commonly run on residential IPs, because attackers know that organizations rarely block residential ASNs (autonomous system numbers). Doing so would often hurt the organization's own business, making it harder for real people to reach its websites and degrading the user experience.

To provide effective security, then, teams need to move from list-based detection to detecting threats in real time.
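A toy simulation shows why per-IP blocking falls behind IP rotation. In the sketch below, one hypothetical client rotates through four IPs while keeping the same client fingerprint. The log, the fingerprint strings, and the threshold of three distinct IPs are illustrative assumptions, not a production rule. Keying on a signal other than the IP ties the rotated requests back together.

```python
from collections import defaultdict

# Hypothetical request log of (ip, client_fingerprint) pairs. The attacker
# rotates IPs but keeps one fingerprint; all values are made up.
requests = [
    ("198.51.100.7", "fp-attacker"),
    ("203.0.113.21", "fp-attacker"),
    ("203.0.113.88", "fp-attacker"),
    ("192.0.2.44", "fp-attacker"),
    ("192.0.2.10", "fp-human-1"),
    ("192.0.2.11", "fp-human-2"),
]

# The IP blacklist only knows the address seen in an earlier attack.
ip_blacklist = {"198.51.100.7"}
blocked_by_ip = [r for r in requests if r[0] in ip_blacklist]

# Group by fingerprint and flag any fingerprint seen behind several distinct IPs.
ips_per_fingerprint = defaultdict(set)
for ip, fingerprint in requests:
    ips_per_fingerprint[fingerprint].add(ip)
suspicious = {fp for fp, ips in ips_per_fingerprint.items() if len(ips) >= 3}

attacker_requests = [r for r in requests if r[1] in suspicious]
print(f"{len(blocked_by_ip)} of {len(attacker_requests)} rotated requests blocked by IP")
```

The IP list stops one of the four attacker requests. Grouping by fingerprint links all four. The threshold is arbitrary: legitimate mobile users can also change IPs, so a real system would weigh this alongside other signals.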

4 – Static and semi-static feeds

Some providers guarantee how often they update their feeds; others don’t. The result is static or semi-static feeds, that is, feeds that are not updated as often as they should be. In addition, the methods used to re-evaluate the data in these feeds are usually unknown. This alone creates a level of uncertainty that businesses cannot afford when it comes to security.
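Feed staleness can at least be handled explicitly on the consuming side. The sketch below assumes each entry carries the time it was last confirmed as malicious, and it drops anything older than a chosen maximum age. The timestamps, the seven-day window, and `active_entries` are hypothetical. Many feeds do not publish per-entry timestamps at all.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical feed entries mapped to when each was last confirmed malicious.
entries = {
    "192.0.2.15": datetime(2022, 7, 30, tzinfo=timezone.utc),
    "198.51.100.9": datetime(2022, 3, 1, tzinfo=timezone.utc),
}


def active_entries(entries: dict, now: datetime, max_age: timedelta) -> set:
    """Keep only entries re-confirmed within max_age; older ones count as stale."""
    return {ip for ip, last_seen in entries.items() if now - last_seen <= max_age}


now = datetime(2022, 8, 1, tzinfo=timezone.utc)
print(active_entries(entries, now, max_age=timedelta(days=7)))  # {'192.0.2.15'}
```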

5 – Unknown feed sources

The sources of these feeds are also usually unknown. Because different vendors tend to reuse the same sources over time, the feeds eventually become highly “dependent” on one another. As a result, many feeds contain the same information, so any false data they share spreads across all of them. This increases both the risk of successful attacks getting through and the rate of false positives.
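Once you have the lists, feed overlap is easy to measure. The sketch below computes the Jaccard similarity (shared entries divided by all distinct entries) between each pair of feeds. It then compares the total number of listed entries with the number of distinct ones. The three feeds are made up.

```python
from itertools import combinations


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Hypothetical feeds: feed_b and feed_c mostly repeat feed_a.
feeds = {
    "feed_a": {"192.0.2.1", "192.0.2.2", "192.0.2.3", "192.0.2.4"},
    "feed_b": {"192.0.2.1", "192.0.2.2", "192.0.2.3", "198.51.100.1"},
    "feed_c": {"192.0.2.1", "192.0.2.2", "192.0.2.3", "192.0.2.4"},
}

for (name_x, x), (name_y, y) in combinations(feeds.items(), 2):
    print(f"{name_x} vs {name_y}: {jaccard(x, y):.2f}")

total_listed = sum(len(entries) for entries in feeds.values())
distinct = len(set().union(*feeds.values()))
print(f"{total_listed} listed entries, {distinct} distinct")
```

Here the three feeds list 12 entries in total, but only 5 are distinct. Subscribing to all three adds only one entry beyond feed_a, and any mistake in the shared entries is repeated three times.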

So, what does our technology team do to maximize security?

In our experience, blacklisting tactics such as blocking IPs won’t stop the attacker in most cases. Other strategies are needed to provide more accuracy, along with customization and optimization for each client, so that businesses are protected from threats and attacks by malicious bots. To achieve this, our technology team continuously improves detection, based mainly on two approaches:

1 – Identifying which automation tool the attacker is using:

This is possible through JavaScript fingerprinting, browser fingerprinting, and fingerprinting at various communication layers. Our web and network technology experts know how to detect every browser and can identify each new browser version.
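The sketch below is a deliberately simplified illustration of fingerprint consistency checks, not CHEQ's detection logic. It compares signals that a client-side script might report against the HTTP User-Agent header. `navigator.webdriver`, `navigator.userAgent`, and `navigator.platform` are real browser properties. The payload format and `fingerprint_flags` are hypothetical. Each check is public knowledge that a determined attacker can spoof.

```python
def fingerprint_flags(signals: dict, user_agent_header: str) -> list:
    """Return consistency flags for one visit.

    `signals` is a hypothetical payload from a client-side script with keys
    mirroring navigator.webdriver, navigator.userAgent and navigator.platform.
    """
    flags = []
    # navigator.webdriver is true when the browser is controlled via WebDriver.
    if signals.get("webdriver") is True:
        flags.append("webdriver")
    # Headless Chrome has historically advertised itself in the User-Agent.
    if "HeadlessChrome" in user_agent_header:
        flags.append("headless-ua")
    # The UA seen by JavaScript should match the header the server received.
    js_ua = signals.get("userAgent")
    if js_ua is not None and js_ua != user_agent_header:
        flags.append("ua-mismatch")
    # A Windows UA paired with a non-Windows navigator.platform is inconsistent.
    platform = signals.get("platform")
    if platform is not None and "Windows" in user_agent_header and not platform.startswith("Win"):
        flags.append("platform-mismatch")
    return flags


ua = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
      "(KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36")
print(fingerprint_flags({"webdriver": True, "userAgent": ua, "platform": "Linux x86_64"}, ua))
# ['webdriver', 'platform-mismatch']
```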

2 – Identifying bots by their behavior:

With this approach, we can still identify the attack regardless of the attacker’s country, region, or ISP, or which parameters they spoof. We do this using our behavioral detection, statistics-based engine, and machine learning algorithms.
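Behavioral engines combine many signals, but a single statistic already shows the idea. The sketch below computes the coefficient of variation of the gaps between a visitor's events. Naive scripts that act on a fixed timer produce near-constant gaps, while people pause irregularly. The timestamps and the 0.1 threshold are hypothetical. On its own, this check would not catch bots that add random jitter.

```python
from statistics import mean, pstdev


def timing_regularity(timestamps: list) -> float:
    """Coefficient of variation of the gaps between consecutive events.

    Values near 0 mean near-constant pacing, typical of naive scripts.
    """
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if len(gaps) < 2 or mean(gaps) == 0:
        return float("inf")  # not enough usable data to judge
    return pstdev(gaps) / mean(gaps)


def looks_scripted(timestamps: list, threshold: float = 0.1) -> bool:
    return timing_regularity(timestamps) < threshold


bot_clicks = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]    # one event every second
human_clicks = [0.0, 0.8, 3.1, 3.6, 7.9, 9.2]  # irregular pauses
print(looks_scripted(bot_clicks), looks_scripted(human_clicks))  # True False
```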

Of course, this is not to say that blacklisting has no value. Adding an external signal can increase confidence in a detection, for example whether an IP appears on a list of VPNs, proxies, or malware-infected hosts. However, blacklisting cannot be the foundation of a security strategy. We are confident that combining these capabilities is the real key to mitigating the most sophisticated bots accurately, with low rates of both false positives and false negatives.
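One common way to let a blacklist hit raise confidence without making it the verdict is to add each signal's weight in log-odds space and convert the total to a probability. The sketch below is a generic logistic scoring example, not CHEQ's engine. The signal names, weights, prior, and 0.8 threshold are hypothetical. In practice, the weights would be learned from labeled traffic.

```python
import math

# Hypothetical log-odds weights per signal; real values would be learned.
WEIGHTS = {
    "ip_on_proxy_list": 1.0,   # external blacklist signal
    "webdriver": 2.5,          # fingerprinting signal
    "platform_mismatch": 1.5,  # fingerprinting signal
    "scripted_timing": 2.0,    # behavioral signal
}
PRIOR_LOG_ODDS = -3.0  # assumes most traffic is human


def bot_probability(signals: set) -> float:
    """Add the prior and the weights of observed signals, then apply the logistic function."""
    z = PRIOR_LOG_ODDS + sum(WEIGHTS[s] for s in signals if s in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def verdict(signals: set, threshold: float = 0.8) -> str:
    return "block" if bot_probability(signals) >= threshold else "allow"


print(verdict({"ip_on_proxy_list"}))                                  # allow
print(verdict({"ip_on_proxy_list", "webdriver", "scripted_timing"}))  # block
```

With these made-up numbers, the blacklist hit alone moves the bot probability from about 5% to about 12%, well below the threshold. Combined with fingerprinting and behavioral signals, it pushes the score to about 92%.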
