How to Identify and Block Web Scrapers

Jeffrey Edwards

Cyber Risks & Threats | April 24, 2024

Have you ever wondered how companies gather all the data they use for their research or analysis? Or how price comparison tools can see what every product from every retailer costs? Often, these businesses use tools known as web scrapers, which are bots designed to extract data from websites and applications automatically.

These tools are often used for legitimate purposes, such as the aforementioned price comparison tools, but they can also be used for malicious activities like stealing content, compromising user privacy, and committing fraud. And regardless of intent, web scrapers can have adverse effects on site performance.

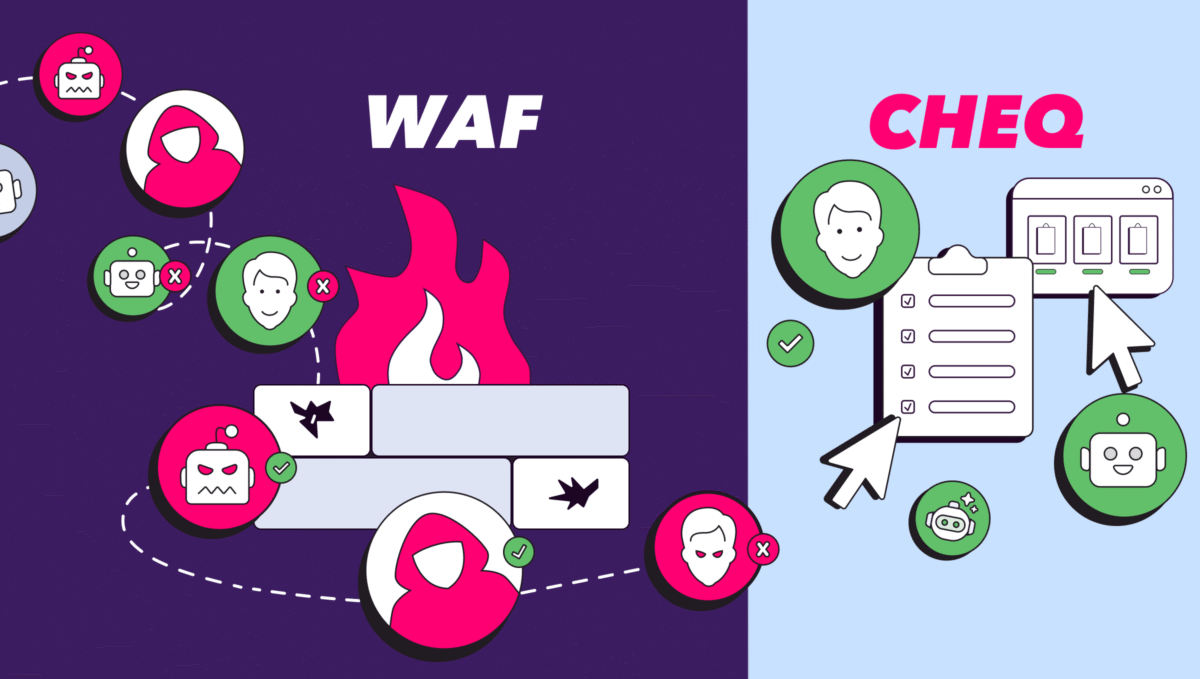

That’s why many site owners attempt to block web scrapers from their web pages entirely. Of course, a dedicated bot detection and mitigation tool, such as CHEQ, will be able to identify and block suspicious and malicious traffic of all kinds in real time, but there are some manual techniques available for those who want to tackle blocking simple scraper bots on their own.

What is a Web Scraper?

A web scraper is a program or script designed to extract data from a website automatically, often without providing any value or benefit to the website owner or its users. Web scrapers are frequently used for unethical purposes, such as stealing content, copying product descriptions or prices, or harvesting email addresses for spamming. They can also be used for legitimate purposes such as data mining and research, particularly when the website owner has permitted the scraper to access and use their data.

How Do Web Scrapers Work?

Web scrapers work by parsing through the website’s HTML code and extracting the data targeted in their parameters.

The complexity of a scraper depends on factors like the website’s structure and the type of data to be extracted, but typically, they’re relatively simple programs.
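
As a rough illustration of how little code a basic scraper needs, the sketch below parses a small HTML fragment with Python’s standard library and pulls out every element marked with a `price` class. The markup, the class name, and the `PriceScraper` helper are all hypothetical; a real scraper would first download the page over HTTP.

```python
from html.parser import HTMLParser


class PriceScraper(HTMLParser):
    """Collects the text of every element whose class list includes 'price'."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._in_price = True

    def handle_endtag(self, tag):
        # Good enough for flat markup like the sample below.
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


# Hypothetical page fragment standing in for a downloaded product page.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Widget</span> <span class="price">$9.99</span></li>
  <li><span class="name">Gadget</span> <span class="price">$24.50</span></li>
</ul>
"""

scraper = PriceScraper()
scraper.feed(SAMPLE_HTML)
print(scraper.prices)  # ['$9.99', '$24.50']
```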

Various open-source web scraping libraries simplify the process for developers, and for non-technical users there are even full-on scraper-as-a-service programs that let users build simple web scrapers via a GUI, with no code necessary. These tools are available free for limited use or at low cost for use at scale.

What Are the Legitimate Use Cases for Web Scrapers?

Of course, the reason scraping tools are so commonplace and widely available is that scraping, when done ethically and correctly, is generally legal and is performed billions of times a day for thousands of different reasons. These scrapers are easy to tell from invalid traffic because they identify themselves in the User-Agent HTTP header and follow the directions of your site’s robots.txt file, which tells a bot which parts of your website it may and may not crawl. Malicious scrapers, however, will usually employ a false HTTP user agent and disregard your robots.txt file; they’re after anything they can get.

Some legitimate use cases for web scraping include market research, data analysis, content aggregation, and SEO research. Of course, just because a scraper isn’t malicious doesn’t mean it won’t use your website’s resources and potentially impact performance. If you’re inundated with scrapers and performance is suffering, the best practice is to block the offending scrapers, regardless of intentions.

What Are the Malicious Use Cases for Web Scrapers?

Unfortunately, web scrapers are also frequently used for malicious purposes, including content theft, price scraping, ad fraud, and even more complex attacks like account takeover and credential stuffing. These attacks can have a significant impact on website owners and users, ranging from increased server load, lowered SEO rankings, and reputational damage, to privacy violations, plagiarism, and cyber theft.

Blocking Simple Web Scrapers

As mentioned above, web scrapers can do significant damage to a website and, regardless of their intent, often make poor guests, leading many site owners to block them entirely. For legitimate scrapers, this is straightforward: update your robots.txt file to instruct scrapers not to crawl your pages. Of course, not every scraper is honest, and truthfully, this approach won’t do much to stem the flow of illegitimate bots. So what next?
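
To make the robots.txt step concrete, here is a minimal sketch that checks a set of rules the same way a well-behaved crawler would, using Python’s built-in `urllib.robotparser`. The rules, the `ExampleScraper` name, and the paths are made up for illustration; substitute the user agents and paths that apply to your site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: shut one named crawler out entirely and keep
# every other crawler out of /private/.
ROBOTS_TXT = """\
User-agent: ExampleScraper
Disallow: /

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(agent, path):
    """Return True if the rules above let this user agent fetch the path."""
    return parser.can_fetch(agent, path)


print(allowed("ExampleScraper", "/products"))    # False
print(allowed("SomeOtherBot", "/products"))      # True
print(allowed("SomeOtherBot", "/private/data"))  # False
```

Keep in mind that robots.txt is purely advisory: it only affects bots that choose to read and obey it.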

A dedicated bot detection and mitigation tool like CHEQ will be able to identify and block suspicious and malicious traffic of all kinds in real time, but for users looking for a manual solution, there are some simple techniques that will block basic bots. However, these techniques require a basic knowledge of website and network administration, and it’s important to back up important files at every stage of the process so that you can easily roll back if you make a mistake.

It’s also important to note that these techniques may be effective for simple bots, but sophisticated bad actors are well aware of these approaches to bot mitigation and have developed workarounds. With that said, let’s examine some simple methods for blocking bots.

Stopping Web Scrapers with IP Blocking

The first and most common method of bot mitigation is IP blocking, an effective way to stop traffic from known or suspected scrapers. IP blocking is relatively easy to set up, but it’s important to be selective with your rules to avoid blocking legitimate users.

Identifying Suspicious IP Addresses

To start, you need to identify IP addresses used by web scrapers. This can be a tedious task, but there are several methods and tools that can help. Here are some ways to identify IP addresses used by web scrapers:

Examine your server logs: Web servers typically maintain logs of all incoming requests, including the IP address of the requester. By analyzing your server logs, you can identify IP addresses that are making an unusually high number of requests or requests that are outside the typical range for a human user. Tools like AWStats and Webalizer can be used to analyze server logs and identify IP addresses associated with web scrapers.

Conduct network traffic analysis: Network traffic analysis tools are used to capture and analyze network traffic in real-time, and can be set up to alert for suspicious activity. By monitoring network traffic and building a baseline of usual activity on your network, you can identify patterns of behavior that are consistent with web scraping activity, such as multiple requests for the same resource in a short period of time.

Use an IP address reputation service: IP address reputation services such as Project Honey Pot and IPQS can provide insight into the reputation of specific IP addresses. These services use a variety of techniques to identify IP addresses associated with spamming, malware, and other malicious activity.
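
The log-based approach above can be sketched in a few lines of Python. This example assumes your server writes the common or combined log format (client IP first, timestamp in square brackets); the sample entries, the `flag_ips` helper, and the threshold of three requests per minute are illustrative only and should be tuned against your own baseline traffic.

```python
import re
from collections import Counter

# Client IP is the first field; the timestamp sits inside [ ... ].
LOG_PREFIX = re.compile(r"^(\S+) \S+ \S+ \[([^\]]+)\]")


def peak_requests_per_minute(log_lines):
    """Return {ip: highest number of requests seen in any single minute}."""
    per_minute = Counter()
    for line in log_lines:
        match = LOG_PREFIX.match(line)
        if not match:
            continue  # skip lines that are not in the expected format
        ip, timestamp = match.groups()
        # "24/Apr/2024:10:00:01 +0000"[:17] -> "24/Apr/2024:10:00"
        per_minute[(ip, timestamp[:17])] += 1
    peaks = {}
    for (ip, _minute), count in per_minute.items():
        peaks[ip] = max(peaks.get(ip, 0), count)
    return peaks


def flag_ips(log_lines, max_per_minute):
    """List IPs whose busiest minute exceeded the allowed request count."""
    peaks = peak_requests_per_minute(log_lines)
    return sorted(ip for ip, peak in peaks.items() if peak > max_per_minute)


# Hypothetical log entries: one busy client and one ordinary visitor.
SAMPLE_LOG = [
    f'203.0.113.7 - - [24/Apr/2024:10:00:{s:02d} +0000] "GET /products?page={s} HTTP/1.1" 200 512'
    for s in range(5)
] + ['198.51.100.4 - - [24/Apr/2024:10:00:30 +0000] "GET / HTTP/1.1" 200 1024']

print(flag_ips(SAMPLE_LOG, max_per_minute=3))  # ['203.0.113.7']
```

A high request rate alone is not proof of scraping, so treat the output as a list of candidates to review rather than an automatic blocklist.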

Implementing IP Blocking Rules

Once you’ve identified a range of IP addresses affiliated with web scrapers, the next step is to implement IP blocking rules to keep those addresses off your website. This can be done in your web server or firewall settings, either by editing configuration from the command line (for example, on an nginx or Apache web server) or via a GUI in some CDN and firewall applications.
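
If you maintain your blocklist as a plain list of addresses, a small script can validate it and generate the matching server directives. The sketch below is one possible approach, not an official tool: it emits `Require not ip` lines for Apache 2.4 and `deny` lines for nginx. The helper names and the addresses (taken from documentation-only ranges) are placeholders.

```python
import ipaddress


def normalize(entries):
    """Validate each address or CIDR range and return canonical CIDR strings."""
    return [str(ipaddress.ip_network(entry, strict=False)) for entry in entries]


def apache_rules(entries):
    """Render an Apache 2.4 block that allows everyone except the listed ranges."""
    lines = ["<RequireAll>", "    Require all granted"]
    lines += [f"    Require not ip {net}" for net in normalize(entries)]
    lines.append("</RequireAll>")
    return "\n".join(lines)


def nginx_rules(entries):
    """Render nginx access-module deny directives for the listed ranges."""
    return "\n".join(f"deny {net};" for net in normalize(entries))


# Placeholder blocklist; replace with the addresses you identified.
BLOCKLIST = ["203.0.113.7", "198.51.100.77/24"]

print(apache_rules(BLOCKLIST))
print(nginx_rules(BLOCKLIST))
```

Because `ipaddress.ip_network` raises `ValueError` on malformed input, a typo in the blocklist is caught before it reaches your server configuration.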

Testing and Refining IP Blocking Rules

After implementing IP blocking rules, you should test them to ensure that they are working as intended. You may need to refine the rules over time as new IP addresses associated with scrapers are identified.

One way to test IP blocking rules is to monitor traffic logs and analyze which IP addresses are being blocked. This can be done by reviewing server logs or using analytics tools that provide real-time traffic analysis. If legitimate users are being blocked, you may need to refine the IP blocking rules to allow access from those IP addresses.

Another method is to use testing tools to simulate traffic from different IP addresses and test the effectiveness of IP blocking rules. For example, you can use a free tool called Jupyter Notebook (formerly IPython Notebook) to write a loop that sends multiple HTTP requests to your website from different IP addresses, both blocked and unblocked, and analyzes the responses to see if your blocking is functioning as intended. Because a request’s source address is determined by the network it leaves from, this means routing the requests through machines, proxies, or VPN endpoints you control. Other tools like cURL and Wget can be used in a similar manner.
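
A test loop of this kind might look like the following sketch, which could be run in a notebook or as a script. It assumes you have HTTP proxies available whose exit addresses you know, and that your server answers blocked addresses with a 403 status; a firewall that silently drops connections would surface as a timeout instead. The proxy URLs and the `check_blocking` helper are hypothetical.

```python
import urllib.error
import urllib.request


def status_via_proxy(url, proxy_url, timeout=10):
    """Fetch url through the given HTTP proxy and return the status code."""
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    )
    try:
        with opener.open(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # urllib raises 4xx/5xx responses as exceptions


def check_blocking(url, expectations, fetch=status_via_proxy):
    """Compare observed results with expectations.

    expectations maps a proxy URL to True if its exit IP should be blocked.
    Returns a list of (proxy_url, status) pairs that did not behave as expected.
    """
    failures = []
    for proxy_url, should_block in expectations.items():
        status = fetch(url, proxy_url)
        if (status == 403) != should_block:
            failures.append((proxy_url, status))
    return failures


# Example call (hypothetical proxies; uncomment and adapt before running):
# failures = check_blocking(
#     "https://www.example.com/",
#     {"http://blocked-proxy.example:8080": True,
#      "http://allowed-proxy.example:8080": False},
# )
# print(failures or "all rules behaved as expected")
```

An empty result means every address behaved as expected; anything else points to a rule that needs refining.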

Blocking Web Scrapers with User Agent Filtering

Another common technique that can be used in combination with (or independently of) IP blocking is user agent filtering, in which websites identify and filter the user agent strings of common scraping tools. This can be done using server-side software that examines the user agent string and blocks traffic from known scraping tools. However, the effectiveness of this method is limited to simple bots, and it can be bypassed by scrapers that use custom user agent strings.

How to Identify Common Scraper User Agents

Before implementing user agent filtering, you need to identify the user agents used by common scraping tools and bots. This process is similar to the process outlined above for identifying IP addresses and can be done by analyzing server logs or using online resources that provide lists of known scraping user agents.
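
For the log-analysis route, the sketch below tallies the user agent strings in a combined-format access log and picks out those containing the default identifiers of a few widely used HTTP tools. The token list is deliberately short and illustrative, not a complete catalogue, and the sample log entries are made up.

```python
import re
from collections import Counter

# In the combined log format the user agent is the last double-quoted field.
USER_AGENT = re.compile(r'"([^"]*)"\s*$')

# Default identifiers sent by some common tools (illustrative, not exhaustive).
KNOWN_TOOL_TOKENS = ("python-requests", "scrapy", "curl", "wget")


def user_agent_counts(log_lines):
    """Count how often each user agent string appears in the log."""
    counts = Counter()
    for line in log_lines:
        match = USER_AGENT.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def tool_user_agents(log_lines):
    """Return the user agents that contain a known tool identifier."""
    return sorted(
        agent
        for agent in user_agent_counts(log_lines)
        if any(token in agent.lower() for token in KNOWN_TOOL_TOKENS)
    )


# Hypothetical log entries.
SAMPLE_LOG = [
    '203.0.113.7 - - [24/Apr/2024:10:00:01 +0000] "GET / HTTP/1.1" 200 512 "-" "python-requests/2.31.0"',
    '198.51.100.4 - - [24/Apr/2024:10:00:02 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
    '192.0.2.9 - - [24/Apr/2024:10:00:03 +0000] "GET / HTTP/1.1" 200 512 "-" "curl/8.4.0"',
]

print(tool_user_agents(SAMPLE_LOG))  # ['curl/8.4.0', 'python-requests/2.31.0']
```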

There are several online resources that provide lists of known scraper user agents, such as the UserAgentString.com database or the Wappalyzer browser extension. These resources can be used as a starting point for identifying common scraper user agents.

User agent analysis tools can also be used to analyze user agent strings and identify common scraper user agents. For example, the User Agent Analyzer tool from UserAgentString.com allows you to analyze user agent strings and identify the device type, operating system, and browser being used.

In some cases, though, it may be necessary to conduct manual testing to identify common scraper user agents. This can be done by using scraping tools or bots to simulate traffic to your website and analyzing the user agent strings in the requests they send.

Implementing User Agent Filtering

User agent filtering can be implemented either at the network edge, via a load balancer, firewall, or CDN, or at a lower level on the web server. Many CDNs and firewalls provide a GUI for user agent filtering, but on a web server, the process typically involves editing your web server configuration file. The exact method varies by service, so it’s important to consult the documentation for your specific web server before proceeding. The steps below outline the process for setting up user agent filtering on an Apache web server; nginx uses different directives, so consult the nginx documentation if that is your server.

User Agent Filtering on an Apache Web Server

To start, it’s important to make a backup of your server configuration file so that you can go back easily if you make a mistake.

Once you’ve backed up your config file, you can start by editing the web server configuration file with the following lines to block traffic from specific user agents:

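RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} baduseragent [NC,OR]

# ... (add further RewriteCond lines here, each ending in [NC,OR]) ...

RewriteCond %{HTTP_USER_AGENT} otherbaduseragent [NC]

RewriteRule .* - [F,L]

These directives require Apache’s mod_rewrite module to be enabled. Replace “baduseragent” and “otherbaduseragent” with the user agents that you want to block. The “NC” flag makes the match case-insensitive, and the “OR” flag joins a condition to the one that follows it with a logical OR, so the rule applies if any of the listed user agents matches. The final RewriteCond must not carry the “OR” flag; otherwise the rule can end up applying to every request, including those that match none of the conditions. The “F” flag in the RewriteRule indicates that the request should be forbidden, and the “L” flag indicates that the rule should be the last one processed.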

Once you’ve made your changes, save and exit the configuration file, and then proceed to test your new rules by accessing your website using a user agent that matches one of the blocked user agents. If the rules are working, the request should be blocked and return a 403 Forbidden response.
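
One way to run this check without changing your browser settings is a short script that sends a request with a chosen User-Agent header and reports the status code. This is a minimal sketch using only the standard library; the URL and user agent values are placeholders, and the `urlopen` parameter exists only so the function can be exercised without a live server.

```python
import urllib.error
import urllib.request


def status_for_user_agent(url, user_agent, timeout=10,
                          urlopen=urllib.request.urlopen):
    """Request url with the given User-Agent header and return the status code."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # a blocked request arrives here as 403


# Example calls (placeholder URL; uncomment and adapt before running):
# print(status_for_user_agent("https://www.example.com/", "baduseragent"))
# print(status_for_user_agent("https://www.example.com/", "Mozilla/5.0"))
```

If the filter is working, the first call should report 403 while the second returns whatever status your site normally serves.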

Blocking Scraper Bots with CAPTCHA Challenges

If all else fails, CAPTCHA challenges, those annoying tests that ask you to prove you’re human by identifying stop signs or buses, can be used to block scraper bots by requiring users to complete a challenge that is difficult for bots to solve but relatively easy, if frustrating, for humans.

To use CAPTCHA challenges to block scraper bots, you can integrate a CAPTCHA provider into your website’s login or registration forms, contact forms, or other areas that are vulnerable to scraping. The CAPTCHA challenge will be displayed to users who attempt to access these areas, and the challenge must be completed before the user can proceed. Some CAPTCHA providers will also examine user behavior and limit challenges to users they deem suspicious.

CAPTCHAs have several advantages, including relatively low maintenance and ease of use, but they can also negatively impact user experience and increase rates of page abandonment, particularly when employed on landing or login pages.

Advanced bots are also able to bypass CAPTCHA challenges by imitating human behavior or by farming out CAPTCHA challenges to CAPTCHA farms, where human workers are paid to solve CAPTCHA challenges on behalf of the bot for fractions of a penny.

To make it harder for bots to bypass CAPTCHA challenges, it’s important to keep your CAPTCHA integration up to date and to use other techniques like rate limiting and IP blocking to complement CAPTCHA challenges.
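
Rate limiting is usually configured in your web server, CDN, or firewall, but the underlying idea is simple enough to sketch. The example below is a bare-bones, in-memory sliding-window limiter keyed by client IP; the class name and the limits are illustrative, and a production setup would normally rely on the rate-limiting features of your existing infrastructure instead.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within any window_seconds span."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested
        self._hits = defaultdict(deque)

    def allow(self, client_ip):
        now = self.clock()
        hits = self._hits[client_ip]
        # Forget requests that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: reject or challenge this request
        hits.append(now)
        return True


# Illustrative limit: 2 requests per 10 seconds for each client.
limiter = SlidingWindowLimiter(max_requests=2, window_seconds=10)
print([limiter.allow("203.0.113.7") for _ in range(3)])  # [True, True, False]
```

A request that exceeds the limit could be rejected outright or, to tie this back to the section above, answered with a CAPTCHA challenge.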

Shut Out Scraper Bots with CHEQ

The techniques outlined above can help you get a leg up on basic web scraper attacks and simple threat actors, but malicious scraper bots, even those commercially available to competitors, are increasingly sophisticated and able to subvert basic detection techniques and masquerade as legitimate traffic.

For businesses serious about protecting their online properties, a comprehensive go-to-market security platform will help automatically detect and block invalid traffic in real time.

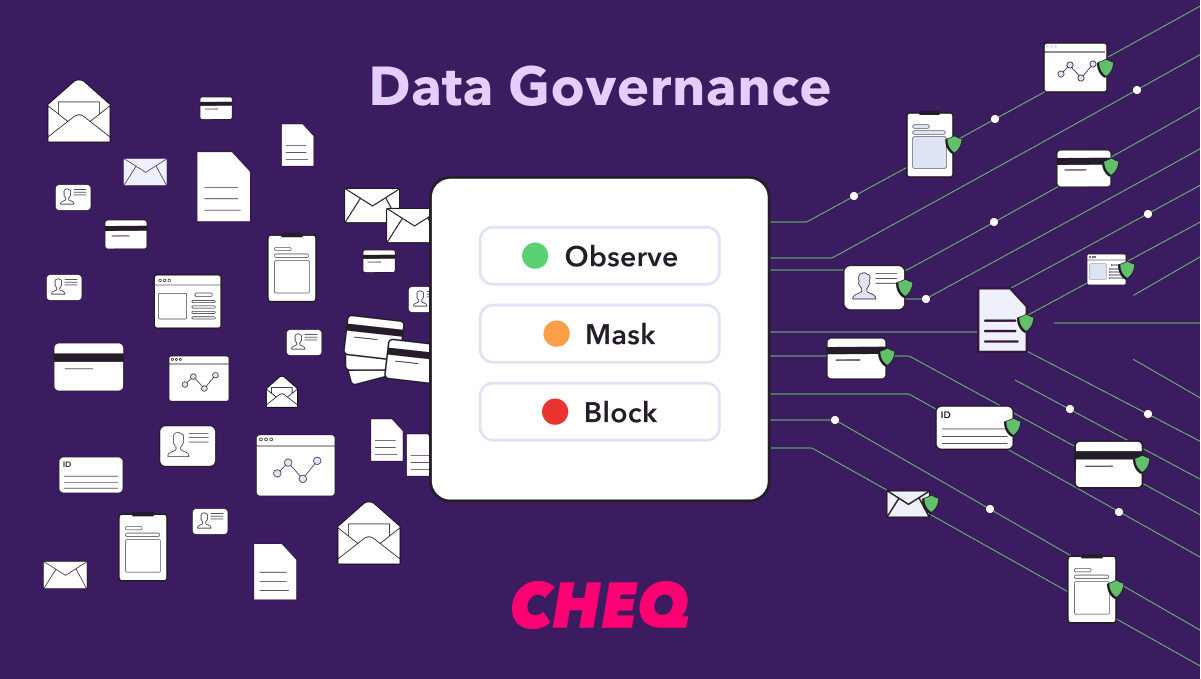

CHEQ leverages thousands of security challenges to evaluate site traffic in real-time, determine whether a visitor is legitimate, suspicious, or invalid, and take appropriate action in blocking or redirecting that user.

Book a demo today to see how CHEQ can help protect your business from web scrapers and other threats.